Notes on LLMs in Biotech Automation

Of course, LLMs have been the hot topic in computing for some time, and, as with any buzzy technology, people are trying them in lots of scenarios, including automation. My starting position is that it's extremely cool that we've figured out a way to turn semantic meaning into math and vice versa, but any application of that technology still needs to be proven: it's not a magic machine. I've seen a handful of demonstrations of "controlling a bioreactor with ChatGPT" on LinkedIn, and they all have two core problems:

- They're just a different way of sending control signals to the reactor (e.g. turn on the base pump until pH = 5) and, in that sense, they are solving an already-solved problem.

- Even if they weren't, they aren't deterministic. The job of automation is to do some defined task safely, predictably, and reliably. In scenarios where physical things are being controlled, I think it's a huge mistake to purposefully inject unpredictability into the system.
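To make the "solved problem" point concrete, here is a minimal sketch of the kind of logic a conventional controller uses for "turn on the base pump until pH = 5": on/off control with a small deadband (hysteresis). The function names, the 0.05 deadband, and the assumption that the pump only doses base are illustrative, not taken from any particular bioreactor; production systems more often use PID loops. What matters is that the same readings always produce the same pump commands.

```python
def base_pump_command(ph: float, setpoint: float, pump_on: bool,
                      deadband: float = 0.05) -> bool:
    """Return True if the (hypothetical) base pump should run.

    Base raises pH, so the pump turns on when pH falls below
    setpoint - deadband and turns off once pH reaches the setpoint.
    In between, it keeps its previous state to avoid rapid toggling.
    """
    if ph < setpoint - deadband:
        return True
    if ph >= setpoint:
        return False
    return pump_on


def run_controller(readings, setpoint, deadband=0.05):
    """Feed a sequence of pH readings through the controller, pump initially off."""
    pump_on = False
    commands = []
    for ph in readings:
        pump_on = base_pump_command(ph, setpoint, pump_on, deadband)
        commands.append(pump_on)
    return commands


readings = [4.80, 4.90, 4.97, 5.01, 4.98, 4.94]
print(run_controller(readings, setpoint=5.0))
# → [True, True, True, False, False, True]
```

Running it twice on the same readings gives the same commands every time, which is exactly the property an LLM in the control loop gives up.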

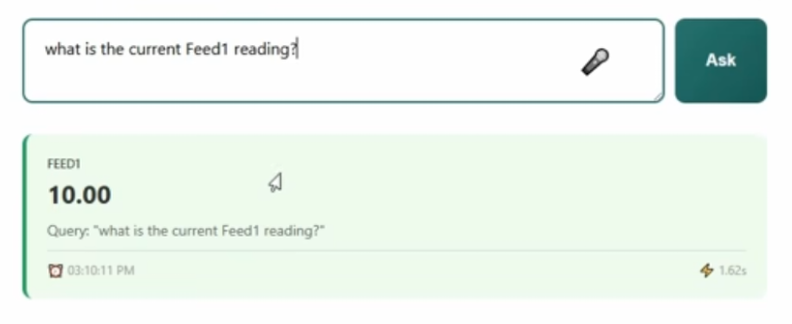

Luis Villa, friend to automation hackers everywhere, gave us a talk[1] at Open Automation Club where he demonstrated the value he gets out of MCP (Model Context Protocol) interfaces with automation. This application makes sense to me: "agentic" LLMs seem to be very effective human-machine interfaces. They let the user say "Please set my bioreactor to pH five", and the rest takes care of itself. The people in the automation world who swear by LLM tools seem to use them this way. Lab automation, especially, demands a lot of time integrating systems. If every system has a well-spec'd MCP server, then the LLM can take care of much of that effort, freeing the engineer from hours mired in technical docs.
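As a rough illustration of what "well-spec'd" might mean, here is a sketch of a tool description in the general shape MCP uses for tools (a `name`, a `description`, and an `inputSchema` written as JSON Schema), plus a handler that checks the arguments before touching the setpoint. The tool name `set_ph_setpoint`, the 4.0 to 8.0 bounds, and the `handle_set_ph` function are hypothetical, and this is plain Python rather than any MCP SDK's API. The model only fills in arguments; the validation and the control loop underneath stay deterministic.

```python
# Hypothetical tool description, shaped like an MCP tool definition
# (name, description, inputSchema as JSON Schema). Bounds are illustrative.
SET_PH_TOOL = {
    "name": "set_ph_setpoint",
    "description": "Set the bioreactor pH setpoint.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "ph": {"type": "number", "minimum": 4.0, "maximum": 8.0},
        },
        "required": ["ph"],
    },
}


def handle_set_ph(arguments: dict, state: dict) -> str:
    """Validate the arguments a client (e.g. an LLM) sends, then update the setpoint."""
    ph_schema = SET_PH_TOOL["inputSchema"]["properties"]["ph"]
    ph = arguments.get("ph")
    # Reject missing or non-numeric values (bool is a subclass of int, so exclude it).
    if not isinstance(ph, (int, float)) or isinstance(ph, bool):
        raise ValueError("ph must be a number")
    # Enforce the same bounds the schema advertises.
    if not ph_schema["minimum"] <= ph <= ph_schema["maximum"]:
        raise ValueError(
            f"ph {ph} outside {ph_schema['minimum']}-{ph_schema['maximum']}"
        )
    state["ph_setpoint"] = float(ph)
    return f"pH setpoint set to {ph:.2f}"


reactor_state = {}
print(handle_set_ph({"ph": 5}, reactor_state))
# → pH setpoint set to 5.00
```

Keeping the bounds in the tool description means the LLM can see the valid range, while the handler enforces it regardless of what the model sends.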

Which leads to my concern: isn't this a form of deskilling? While natural-language control is appealing to those with no training, it's remarkably inefficient compared to pressing a button or turning a dial. With even moderately complex systems, a trained operator of automated equipment will be faster and more accurate than an LLM. I like the metaphor of playing a guitar: once you have a little training, you can play a song much faster than you can describe the notes in it. Maybe the agentic LLM + MCP approach lowers the barrier to entry, but it lowers the performance ceiling at the same time.

[1] This talk contained some sensitive information, so it's not posted on the YouTube channel. This happens with about 30% of OAC talks, so I encourage you to come to a meeting to get the skinny!